Meta’s MMS – Speech2Text & Text2Speech

Meta's MMS project enables machines to recognize and produce speech in over 1,000 languages using New Testament translations for data sets. The project has scaled speech to text and text-to-speech to support over 1,100 languages, covering 10 times more languages than any existing speech recognition or speech generation model.

Breaking News: Meta's MMS Project Breaks Language Barriers with Recognition in over 1,100 Languages

Topics

- Massively multilingual speech project

- Break down language barriers

- Using New Testament translations for data sets

Video summary

Meta has shared new progress on its AI speech work: the Massively Multilingual Speech (MMS) project has now scaled speech-to-text and text-to-speech to support over 1,100 languages, about 10 times more than any existing speech recognition or speech generation model. The biggest challenge in building such a system is finding the large amounts of labeled data needed for training, and MMS addresses this by building data sets from New Testament translations, which have audio recordings of people reading the text in different languages. The results show that MMS models outperform existing models while covering 10 times as many languages.

Video transcript

hi guys and welcome to the voice of AI

my name is Chris Plante and some super

cool big big news from yesterday Meta

have shared new progress on their AI

speech work the massively multilingual

speech project has now scaled speech to

text and text-to-speech to support over

1,100 languages which is a 10 times

increase from previous work MMS is a

game changer for the world of speech

recognition and speech generation

technology this project led by Meta aims

to break down language barriers by

enabling machines to recognize and

produce speech in over 1,000 languages

covering 10 times more languages than

any existing speech recognition or

speech generation model with this

multi-model system people from all over

the world can access information and

communicate effectively in their

preferred language preserving linguistic

diversity across the globe one of the

biggest challenges in creating a

multi-modal system for speech

recognition and speech generation is

finding the vast amounts of labeled data

necessary for training such models

for most languages such data simply

doesn't exist making it almost

impossible to develop good quality

models for speech tasks however the MMS

project overcomes these challenges by

using the New Testament translations

which have audio recordings of people

reading the text in different languages

to create data sets of readings in

over 1,100 languages this combined with

unlabeled audio recordings from various

Christian religious readings provides

labeled data for 1,100 languages and

unlabeled data for nearly 4,000

languages the results of this project

show that MMS models outperform

existing models and cover 10 times as

many languages their models also perform

equally well for male and female voices

let's have a quick look at the data okay

so the first graph shows character error

rates this is an analysis of potential

gender bias automatic speech recognition

models trained on MMS data have a similar

error rate for male and female speakers

based on the FLEURS benchmark and now

here on character error rates Meta

trained multilingual speech recognition

models on over 1,100 languages as the

number of languages increases

performance does decrease but only

slightly moving from 61 to 1,107

languages increases the character error

rate by only about 0.4 but it increases

the language coverage by over 18 times

if we look here in the word error rate

you can see in a like-for-like

comparison with OpenAI's Whisper Meta

found that models trained on MMS data

achieve half the word error rate but MMS

covers 11 times more languages this

demonstrates that their model can

perform very well compared to the best

current speech models and finally the

error rates in percentage Meta trained a

language identification model for over

4,000 languages using their data sets as

well as existing data sets such as FLEURS

and Common Voice and evaluated it on the

FLEURS language identification task it

turns out that supporting 40 times the

number of languages still results in a

very good performance furthermore the

MMS project provides code and models

publicly so that other researchers can

build upon their work and make

contributions to preserve the language

diversity in the world while the models

aren't perfect and run the risk of

mistranslating select words or phrases

the project recognizes the importance of

collaboration within the AI community to

ensure that AI technologies are

developed responsibly this project has

the power to make significant impact on

the future of communication breaking

down the language barriers that have

historically existed and encouraging

people to preserve their languages the

vision is to create a world where

technology can understand and

communicate in any language effectively

preserving linguistic diversity while

this is really the beginning the

massively multilingual speech MMS

project represents a significant step

towards achieving this goal with the

advancement of technology the future is

looking bright for communication across

languages and cultures

thanks for watching the video today

please don't forget to like and

subscribe and join me on the journey to

unlock the potential of AI

if you have any questions or any

feedback please leave them in the

comments section below I'll be happy to

read them so I'll see you next time and

all the very best I'm Chris from the

voice of AI cheers and bye-bye now

Dig Deeper

Meta's Massively Multilingual Speech Project: Breaking Down Language Barriers

Yesterday, Meta announced progress on its AI speech work, the Massively Multilingual Speech (MMS) project, which now supports over 1,100 languages, a tenfold increase over previous work. The MMS project aims to enable machines to recognize and produce speech in more than 1,000 languages, breaking down language barriers and helping preserve linguistic diversity across the globe.

How MMS Overcomes Language Data Challenges

One of the biggest challenges in creating a system for speech recognition and generation is finding the vast amounts of labeled data necessary for training such models. For most languages, such data simply doesn't exist. The MMS project overcomes this by using New Testament translations, which come with audio recordings of people reading the text, to create data sets of readings in over 1,100 languages. Combined with unlabeled audio recordings of various other Christian religious readings, this provides labeled data for over 1,100 languages and unlabeled data for nearly 4,000 languages.

MMS Outperforms Existing Models

The results of this project show that MMS models outperform existing models, covering ten times as many languages while performing equally well for male and female voices. In a like-for-like comparison with OpenAI's Whisper, models trained on MMS data achieve half the word error rate while covering 11 times more languages. Performance does degrade as languages are added, but only slightly: moving from 61 to 1,107 languages increases the character error rate by only about 0.4, while increasing language coverage by over 18 times.
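For readers unfamiliar with the two metrics mentioned here, the following is a minimal sketch of how word error rate and character error rate are commonly computed: the edit distance between a reference transcript and a model's output, divided by the reference length. The function names and the example sentences are illustrative assumptions, not Meta's evaluation code, and real benchmarks usually normalize text (casing, punctuation) first. The last line checks the "over 18 times" coverage figure from the 61 and 1,107 language counts quoted in the video.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or lists of words)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def character_error_rate(reference, hypothesis):
    """Character-level edits divided by the number of reference characters."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


def word_error_rate(reference, hypothesis):
    """Word-level edits divided by the number of reference words."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


# Hypothetical example: one wrong word out of six.
reference = "the cat sat on the mat"
hypothesis = "the cat sat on a mat"

wer = word_error_rate(reference, hypothesis)
cer = character_error_rate(reference, hypothesis)
print(f"WER: {wer:.3f}  CER: {cer:.3f}")

# Language coverage ratio from the counts quoted in the video.
coverage_ratio = 1107 / 61
print(f"Coverage increase: {coverage_ratio:.1f}x")
```

Because a single wrong word can contain only a few wrong characters, the character error rate for the same output is typically lower than the word error rate, which is one reason the two figures should not be compared directly.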

The Future of Communication Across Languages and Cultures

The MMS project provides code and models publicly, enabling other researchers to build upon their work and make contributions to preserving language diversity in the world. The project recognizes the importance of collaboration within the AI community to ensure that AI technologies are developed responsibly. With the advancement of technology, the future is looking bright for communication across languages and cultures, with the vision to create a world where technology can understand and communicate in any language effectively.

Impact on Customer Support

While the project is not directly about customer support, breaking down language barriers and enabling machines to recognize and produce speech in over 1,000 languages could have a significant impact on supporting customers who speak different languages. With such a system, companies can communicate effectively in each customer's preferred language, providing better customer support and enhancing the overall customer experience.

Video description

Well, here is some super exciting news from yesterday! Meta's Massively Multilingual Speech project has made impressive progress in enabling machines to recognize and produce speech in over 1,100 languages, covering ten times more languages than any existing speech recognition or speech generation model. It's heartening to see that Meta is working towards breaking down language barriers and enabling people from all over the world to access information and communicate effectively in their preferred language. The MMS project has addressed a significant challenge in creating a system for speech recognition and generation by using Bible translations to create a dataset of readings in over 1,100 languages. Combined with unlabeled audio recordings from various religious readings, this provides labeled data for 1,100 languages and unlabeled data for nearly 4,000 languages.

What's exciting is that Meta has provided code and models publicly so that other researchers can build upon their work and make contributions to the preservation of language diversity. This is an important step towards creating a world where technology can understand and communicate in any language, effectively preserving linguistic diversity. Overall, the Massively Multilingual Speech (MMS) project represents a significant step towards achieving this goal.

The Massively Multilingual Speech (MMS) project is an incredible advancement in the field of artificial intelligence and language technology. The project's ability to recognize and produce speech in over 1,100 languages is revolutionary and has the potential to make a significant impact on how people communicate across the world.

One of the most significant challenges in creating a system for speech recognition and speech generation is finding the vast amounts of labeled data necessary for training such models. The MMS project overcomes this challenge by using New Testament translations together with unlabeled recordings of other religious readings, providing labeled data for 1,100 languages and unlabeled data for nearly 4,000 languages. The results of this project show that the MMS models outperform existing models and cover ten times as many languages.

This project has the power to make a significant impact on the future of communication, breaking down the language barriers that have historically existed and encouraging people to preserve their languages. The ability to communicate effectively in any language will positively affect global commerce and cultural exchange.

Moreover, the MMS project provides code and models publicly so that other researchers can build upon their work and make contributions to the preservation of language diversity in the world. The project recognizes the importance of collaboration within the AI community to ensure that AI technologies are developed responsibly, which is crucial for promoting trust and avoiding unintended consequences.

The MMS project represents a significant step towards achieving the goal of creating a world where technology can understand and communicate in any language, effectively preserving linguistic diversity. It holds the potential to change the way the world thinks about cross-linguistic communication. With the advancements of technology, the future is looking bright for communication across languages and cultures.

In conclusion, the MMS project signifies a fantastic achievement in the field of artificial intelligence and language technology. It will undoubtedly impact the future by breaking down language barriers and promoting linguistic diversity. It is exciting to think about the potential positive implications of this project and other advancements in AI technology in facilitating an inclusive global conversation.

Source: https://ai.facebook.com/blog/multilingual-model-speech-recognition/?utm_source=twitter&utm_medium=organic_social&utm_campaign=blog&utm_content=card

Source: GitHub: https://github.com/facebookresearch/fairseq/tree/main/examples/mms

Source: Meta video: https://twitter.com/i/status/1660722199395704852

Source: FLEURS: Few-shot Learning Evaluation of Universal Representations of Speech: https://arxiv.org/abs/2205.12446

Source: Robust Speech Recognition via Large-Scale Weak Supervision: https://arxiv.org/abs/2212.04356

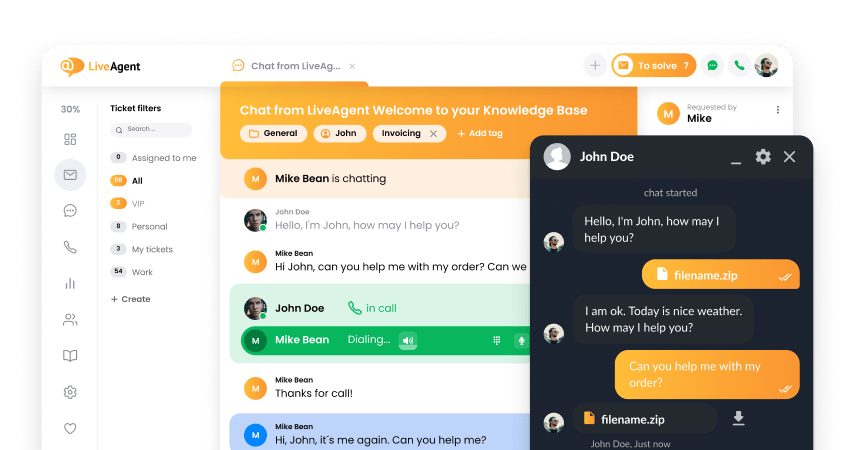

LiveAgent is a versatile customer service software that offers a range of features including ticketing system, multiple customer portals, knowledge base, search widgets, mobile help desk apps, cryptography and multiple data centres. Password validators, SSO and billing management are also available. Moreover, LiveAgent integrates with various other platforms such as Slack, WordPress, Drupal and SquareSpace. Other services include CTI, CRM, eCommerce and email marketing. Migration plugins are also available to import data from previous help desk systems.

Migrating from Vocalcom to LiveAgent?

LiveAgent offers a comprehensive customer service software with features such as ticketing, automation, and 24/7 support. It also provides integrations for social media platforms like Twitter, Instagram, and Viber. The software is highly rated and used by well-known companies like Huawei and BMW. LiveAgent offers different pricing plans to cater to the needs of small, medium, and large businesses. The platform also supports data migration from various other solutions and provides a 30-day free trial.

You will be

in Good Hands!

Join our community of happy clients and provide excellent customer support with LiveAgent.

Our website uses cookies. By continuing we assume your permission to deploy cookies as detailed in our privacy and cookies policy.

- How to achieve your business goals with LiveAgent

- Tour of the LiveAgent so you can get an idea of how it works

- Answers to any questions you may have about LiveAgent

Български

Български  Čeština

Čeština  Dansk

Dansk  Deutsch

Deutsch  Eesti

Eesti  Español

Español  Français

Français  Ελληνικα

Ελληνικα  Hrvatski

Hrvatski  Italiano

Italiano  Latviešu

Latviešu  Lietuviškai

Lietuviškai  Magyar

Magyar  Nederlands

Nederlands  Norsk bokmål

Norsk bokmål  Polski

Polski  Română

Română  Русский

Русский  Slovenčina

Slovenčina  Slovenščina

Slovenščina  简体中文

简体中文  Tagalog

Tagalog  Tiếng Việt

Tiếng Việt  العربية

العربية  Português

Português